Life is full of dilemmas and tough choices, for our greatest enjoyment. Well, some can be annoying to resolve. So I present to you a contribution from machine learning toward relieving us of the burden of having to make choices in our lives.

You have probably played the popular game "Would you rather", in which two options are presented and only one can be chosen. It can be between two equally unwanted things: would you rather "lose the ability to feel emotions" or "be physically paralyzed". It can be between two super abilities: would you rather "control the elements" or "control time". And so on. The best ones are those that make the choice so hard you can't even decide. But have no fear: algorithms might help you there.

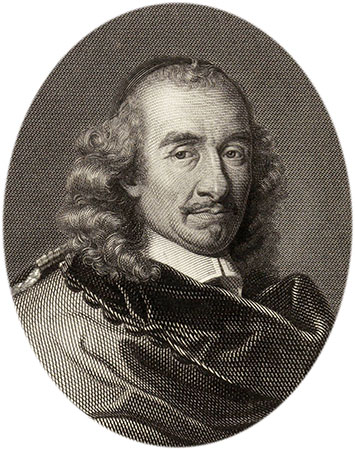

As a fun project for testing out word embeddings, I implemented a neural network that chooses between two given statements, and called it Pierre Corneille Bot, after the French dramatist (1606-1684) well known for putting his characters in front of undecidable dilemmas. In this article I will go through the steps of building it.

The dataset

I first built a dataset of "Would you rather" questions. To turn the task into a supervised learning problem, I needed labels, so I scraped the data from the either.io website, which shows how many people voted for each option. Each question has a "blue" choice and a "red" choice, and once you pick one, the vote counts are displayed.

The website has no API or other way to retrieve its database, so I scraped it with the Python library Selenium, which lets you automate a web browser by performing action chains. This code iterates over the questions on either.io:

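```python
import time

from tqdm import tqdm
from selenium import webdriver
from selenium.webdriver.common.keys import Keys
from selenium.webdriver.common.action_chains import ActionChains

url = "http://either.io/"

# Launches a local Firefox instance (Selenium drives it through geckodriver).
browser = webdriver.Firefox()
browser.get(url)

for _ in tqdm(range(10000)):
    # Extracting the question text and vote counts from the page would go here (not shown).
    # Press the right arrow key to move on to the next question; a fresh
    # ActionChains per iteration ensures earlier key presses are never replayed.
    ActionChains(browser).send_keys(Keys.RIGHT).perform()
    # Crude wait for the next question to load.
    time.sleep(2)
```

Setting `time.sleep(2)` is a hacky way to deal with browser latency: it simply waits long enough for the elements to be displayed.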

The dataset I collected has 1343 "Would you rather" dilemmas.

The model

With the dataset ready, the supervised task becomes simple: predicting the share of votes that went to the blue option, i.e. blue votes divided by the total number of votes.

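```python
def x_percentage(x, y):
    '''
    Share of x in the total x + y, as a fraction between 0 and 1.
    '''
    return x / (x + y)
```

A neural network predicts this share and updates its parameters with gradient descent while training on the dataset. Technically, I used a siamese network (the same encoder applied to both options) on top of a word-level embedding layer frozen to GloVe embeddings. An LSTM can serve as that encoder; the version shown below uses 1D convolutions instead.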

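```python
from keras.models import Sequential
from keras.layers import Conv1D, MaxPooling1D, GlobalMaxPooling1D, Dense


def neural_net(embedding_layer):
    """One branch of the siamese network; embedding_layer is a frozen GloVe Embedding layer."""
    net = Sequential()
    net.add(embedding_layer)  # embedding layer frozen to GloVe embeddings
    # Three convolution blocks extract n-gram features from the word vectors.
    net.add(Conv1D(128, 5, activation='relu'))
    net.add(MaxPooling1D(5))
    net.add(Conv1D(128, 5, activation='relu'))
    net.add(MaxPooling1D(5))
    net.add(Conv1D(128, 5, activation='relu'))
    # Collapse the sequence dimension into a single 128-d vector.
    net.add(GlobalMaxPooling1D())
    net.add(Dense(128, activation='relu'))
    return net
```

The model is quite small and trains fast: even on my old CPU-only laptop, one epoch takes about 12 seconds.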
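One practical consequence of this stack: with Keras defaults (Conv1D uses `padding='valid'` and stride 1; MaxPooling1D uses a stride equal to its pool size and `padding='valid'`), every layer shortens the sequence, so the padded input must be long enough for the last convolution to still produce at least one position. The sketch below is plain Python, independent of Keras; it hard-codes the kernel and pool sizes from `neural_net()` and assumes those default arguments. The helper names are hypothetical.

```python
# Plain-Python sketch (no Keras needed) of how the sequence length shrinks
# through the layers of neural_net(), assuming Keras default arguments.

def conv1d_len(n, kernel=5):
    # Conv1D default: padding="valid", strides=1
    return max(n - kernel + 1, 0)


def maxpool1d_len(n, pool=5):
    # MaxPooling1D default: strides=pool_size, padding="valid"
    return (n - pool) // pool + 1 if n >= pool else 0


def encoder_output_len(n):
    """Positions left for GlobalMaxPooling1D given n input tokens."""
    n = conv1d_len(n)
    n = maxpool1d_len(n)
    n = conv1d_len(n)
    n = maxpool1d_len(n)
    return conv1d_len(n)


def min_input_len():
    """Smallest padded length for which the last Conv1D still has output."""
    n = 1
    while encoder_output_len(n) < 1:
        n += 1
    return n


print(min_input_len())           # 149
print(encoder_output_len(1000))  # 35
```

The minimum works out to 149 tokens, and a 1000-token input leaves 35 positions for GlobalMaxPooling1D. Since a "Would you rather" option is usually a short sentence, most of the positions this encoder sees would be padding.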

The first epochs of training:

```
Epoch 1/20
2686/2686 [==============================] - 13s 5ms/step - loss: 0.1275 - acc: 0.0000e+00
Epoch 2/20
2686/2686 [==============================] - 12s 4ms/step - loss: 0.1165 - acc: 0.0000e+00
Epoch 3/20
2686/2686 [==============================] - 12s 4ms/step - loss: 0.0974 - acc: 0.0000e+00
Epoch 4/20
2686/2686 [==============================] - 12s 4ms/step - loss: 0.0876 - acc: 0.0000e+00
Epoch 5/20
2686/2686 [==============================] - 12s 4ms/step - loss: 0.0828 - acc: 0.0000e+00
[...]
```

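We've got some intense learning happening here. The loss goes down steadily, while the accuracy stays at zero: accuracy is not a meaningful metric for continuous targets such as vote shares, so the loss is the number to watch.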
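The `neural_net()` function only builds one branch. To make the siamese idea concrete, here is a minimal numpy sketch of a full forward pass, not the bot's actual Keras model: a single shared encoder (hypothetical `encode`, which averages word vectors and applies one ReLU layer in place of the convolutional stack) processes both options, and the two encodings are combined into one sigmoid output in (0, 1), matching the range of the blue-vote share. The random embedding table stands in for GloVe, all weights are untrained, and combining the encodings by subtraction is an assumption made for this sketch.

```python
import numpy as np

# Minimal siamese forward pass (sketch, not the bot's Keras model).
rng = np.random.default_rng(0)
VOCAB, EMB_DIM, HIDDEN = 50, 8, 16

# Hypothetical stand-ins: a frozen embedding table (GloVe in the real bot)
# and randomly initialised, untrained encoder / output weights.
embeddings = rng.normal(size=(VOCAB, EMB_DIM))
W_enc = rng.normal(size=(EMB_DIM, HIDDEN))
w_out = rng.normal(size=HIDDEN)


def encode(token_ids):
    """Shared encoder: average the word vectors, then one ReLU layer."""
    mean_vec = embeddings[token_ids].mean(axis=0)
    return np.maximum(mean_vec @ W_enc, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def predict_blue_share(blue_ids, red_ids):
    """Predicted share of votes for the blue option (both sides share weights)."""
    diff = encode(blue_ids) - encode(red_ids)
    return sigmoid(diff @ w_out)


blue = np.array([3, 14, 15])
red = np.array([9, 26, 5, 35])
p = predict_blue_share(blue, red)
q = predict_blue_share(red, blue)
print(p, q)  # the two values sum to 1 (up to floating-point rounding)
```

Because the output layer has no bias and works on the difference of encodings, swapping the options gives exactly 1 - p, and two identical options give exactly 0.5. A concatenation-based merge would guarantee neither property.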

Pitfalls

I ran into a few difficulties while implementing this project. Here are the solutions I found:

- Mix the blue/red order of the options while the neural net is training; otherwise it overfits to a specific answer.

- Use a sigmoid activation function on the output layer instead of tanh; otherwise the neural net chooses never to decide (its predictions stay close to 0). This intrigued me, and I would welcome any insight on it!
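One way to implement the first point is sketched below. The training log reports 2686 samples per epoch, exactly twice the 1343 dilemmas, which suggests each dilemma appears a second time with its options swapped; this sketch assumes that scheme. The tuple layout of the scraped data, the function name `build_training_samples`, and the vote counts in the example are hypothetical.

```python
import random


def build_training_samples(dilemmas, seed=0):
    """Turn scraped dilemmas into (blue_text, red_text, blue_share) samples.

    dilemmas: iterable of (blue_text, red_text, blue_votes, red_votes)
    tuples (hypothetical layout). Each dilemma is emitted twice, once with
    its options swapped, so colour alone cannot predict the answer.
    """
    samples = []
    for blue_text, red_text, blue_votes, red_votes in dilemmas:
        share = blue_votes / (blue_votes + red_votes)  # same as x_percentage
        samples.append((blue_text, red_text, share))
        samples.append((red_text, blue_text, 1.0 - share))
    random.Random(seed).shuffle(samples)  # mix original and swapped pairs
    return samples


# Made-up vote counts, for illustration only.
data = [
    ("Control the elements", "Control time", 300, 100),
    ("Go everywhere barefoot", "Wear a ski suit all the time", 40, 60),
]
samples = build_training_samples(data)
print(len(samples))  # 4
```

Because the swapped copy gets the target 1 - share, the only way for the model to fit both copies is to look at the option texts rather than at their colour.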

Testing

The bot quickly overfits the dataset, as it is small (only a few thousand samples). On new dilemmas it tends to predict values close to 50-50 and cannot really decide. This varies from case to case, though, and some outcomes were interesting, with the bot making arguable decisions. I noticed the system tends to be more decisive on dilemmas whose options are long sentences, and outputs 50-50 decisions when the options are short sentences or even single words. Here are some examples.

| Option 1 (blue) | System output (predicted blue share) | Option 2 (red) |
|---|---|---|
| live | 0.5018624 | die |
| Becoming the president of the United States | 0.35958546 | Having asthma every time you talk to someone |
| Spend a day in the North Pole | 0.50490034 | Spend a day in the Sahara Desert |
| Be stalked by a demon for three days then die | 0.8209515 | Be stalked by a ghost for your entire life |
| Go everywhere barefoot | 0.31405994 | Wear a ski suit all the time |
| Swim 300 meters through dead bodies | 0.51103145 | Swim 300 meters through shit |
| Have a cat with a dog’s personality | 0.49127114 | Have a dog with a cat’s personality |
| Believe everything you’re told | 0.6322648 | Lose the ability to lie |
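Since the output is the predicted share of blue votes, reading the table comes down to comparing it with 0.5. The helper below makes that explicit; the name `decide` and the 0.05 tolerance around 0.5 are hypothetical choices for illustration, not part of the bot.

```python
def decide(blue_share, margin=0.05):
    """Turn a predicted blue-vote share into a verdict.

    margin (hypothetical): predictions within this distance of 0.5
    are reported as undecided.
    """
    if blue_share > 0.5 + margin:
        return "blue"
    if blue_share < 0.5 - margin:
        return "red"
    return "undecided"


# System outputs copied from the table above.
for option_blue, option_red, share in [
    ("live", "die", 0.5018624),
    ("Be stalked by a demon for three days then die",
     "Be stalked by a ghost for your entire life", 0.8209515),
    ("Go everywhere barefoot", "Wear a ski suit all the time", 0.31405994),
]:
    print(f"{option_blue} / {option_red}: {decide(share)}")
```

With this tolerance, "live" vs "die" (0.5018624) counts as undecided, while the demon/ghost dilemma (0.8209515) goes to blue and barefoot/ski suit (0.31405994) goes to red.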

Conclusion

You can have fun with Pierre Corneille Bot and try it live on your toughest dilemmas here: https://pierre-corneille-bot.herokuapp.com/ I am also releasing the source code of this project under the MIT license; you can check it out on my GitHub. I'd be happy to hear your feedback on the bot!