I stumbled upon an article about neural networks that guess passwords. It presents an original application of Generative Adversarial Networks (GANs).

What are GANs?

Generative Adversarial Networks: three words that have been used a lot over the past few years in Artificial Intelligence research. The term refers to neural networks trained within an adversarial framework. A neural network is a function with many parameters, which can be optimized by measuring the errors the network makes with respect to the expected output, or ground truth. Automatic generation, however, has no such "ground-truth" output to compare the network's output with; instead, there is a ground-truth distribution of samples considered real.
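To make "learning from errors against a ground truth" concrete, here is a minimal sketch assuming the simplest possible "network": a single parameter `w` in the model y = w * x, fitted to known (x, y) pairs by gradient descent on the mean squared error. The helper `fit_slope` and the toy data are illustrative only; real networks have millions of parameters and compute their gradients automatically.

```python
def fit_slope(xs, ys, lr=0.05, steps=200):
    """Fit y ~ w * x by gradient descent on the mean squared error."""
    w = 0.0  # the single trainable parameter, arbitrary starting point
    n = len(xs)
    for _ in range(steps):
        # error between the model's outputs and the ground-truth targets
        errors = [w * x - y for x, y in zip(xs, ys)]
        # derivative of mean((w * x - y) ** 2) with respect to w
        grad = sum(2 * e * x for e, x in zip(errors, xs)) / n
        # move w against the gradient to reduce the error
        w -= lr * grad
    return w


w = fit_slope([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
print(round(w, 4))  # 2.0
```

A generative model has no target y for each input it produces, which is why the adversarial setup replaces this fixed error with a learned judge.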

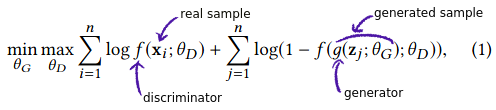

During training, two networks compete with each other in a minimax game. A generator network produces samples, which a discriminator network rates as real or fake. The discriminator is given real samples from the dataset as well as fake samples from the generator, and is trained to tell them apart. Meanwhile, the generator gets better and better at producing convincingly realistic samples, so the discriminator's task becomes harder and harder, up to the point where it can no longer tell generated samples from real ones.
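The quantity both networks fight over can be estimated from the discriminator's outputs: the average of log D(x) over real samples plus the average of log(1 - D(G(z))) over generated ones, where D returns the probability that its input is real. The discriminator tries to push this value up, the generator tries to push it down. The sketch below assumes those probabilities are already available as plain lists; `gan_value` is a hypothetical helper, not part of any library.

```python
import math


def gan_value(d_real, d_fake, eps=1e-12):
    """Empirical estimate of the GAN value function V(D, G).

    d_real: discriminator outputs D(x) on real samples (probabilities)
    d_fake: discriminator outputs D(G(z)) on generated samples
    eps guards against log(0) for fully confident outputs.
    """
    real_term = sum(math.log(max(p, eps)) for p in d_real) / len(d_real)
    fake_term = sum(math.log(max(1.0 - p, eps)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A discriminator that separates real from fake well: value close to its maximum, 0.
print(gan_value([0.9, 0.95], [0.05, 0.1]))
# A discriminator that cannot tell them apart outputs 0.5 everywhere.
print(gan_value([0.5, 0.5], [0.5, 0.5]), -math.log(4))
```

When the discriminator can no longer distinguish generated samples from real ones, its best answer is 0.5 everywhere and the value equals -log 4, the global optimum of the original GAN game.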

Loss function used in GAN training: the generator and the discriminator duel each other by respectively minimizing and maximizing the same function.
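For reference, this is the standard form of the objective in the original GAN formulation, where D(x) is the probability the discriminator assigns to x being real and G(z) is the generator's output for a noise vector z drawn from a prior p_z:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$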

Generative models have applications in image editing, text-to-image generation and music generation, among others.

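PassGAN uses an improved version of GAN that overcomes major difficulties of applying GANs to text generation: the Improved Wasserstein GAN (IWGAN). IWGAN notably uses the Wasserstein distance (also called earth mover's distance) between the distribution of real samples and the distribution of generated samples to compute the network's error. Unlike the loss of the original GAN, this distance keeps providing a useful training signal even when the two distributions barely overlap, which is typical of discrete data such as text.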
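In one dimension, the earth mover's distance between two equally sized samples has a simple closed form: sort both samples and average the absolute differences between matched values. This minimal sketch (the helper `wasserstein_1d` is illustrative) shows why the distance is a useful training signal: it keeps growing as generated samples drift away from real ones, even when the two never overlap. Note that a WGAN does not compute the distance this way; a critic network learns to estimate it.

```python
def wasserstein_1d(a, b):
    """Earth mover's distance between two equally sized 1D samples.

    In one dimension the optimal transport plan simply matches the i-th
    smallest value of one sample with the i-th smallest value of the other.
    """
    if len(a) != len(b) or not a:
        raise ValueError("samples must be non-empty and of equal size")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


real = [0.0, 0.0, 0.0]
for shift in (1.0, 5.0, 50.0):
    fake = [shift] * 3  # generated samples that never overlap the real ones
    print(shift, wasserstein_1d(real, fake))
```

For such non-overlapping samples, the Jensen-Shannon divergence behind the original GAN loss would be log 2 whatever the shift, giving the generator no hint about which direction to move.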

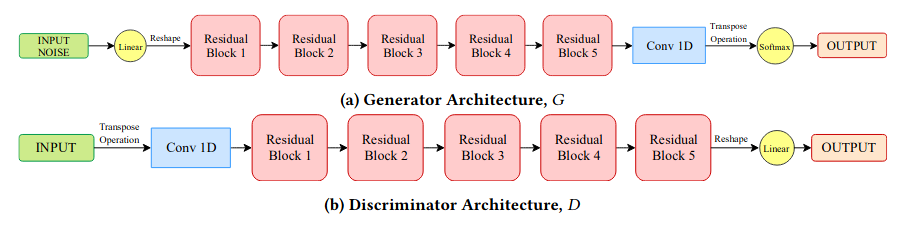

PassGAN follows a typical convolutional architecture: a cascade of residual blocks, whose skip connections keep the gradient flowing into the deep layers of the network. Note also the use of 1D convolutions, a popular choice for processing textual data.
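A residual block can be sketched in a few lines. This is a simplified, single-channel version assuming plain Python lists and hand-picked kernels; PassGAN's actual blocks use multi-channel convolutions with learned weights, so treat `conv1d_same` and `residual_block` as illustrative helpers. Each output position corresponds to a character position, and the skip connection adds the input back, so the gradient always has a direct path through the block.

```python
def relu(xs):
    return [max(0.0, v) for v in xs]


def conv1d_same(xs, kernel, bias=0.0):
    """Single-channel 1D convolution (cross-correlation, as in deep learning libraries).

    Zero padding keeps one output value per input position. Assumes an odd kernel size.
    """
    k = len(kernel)
    if k % 2 == 0:
        raise ValueError("kernel size must be odd")
    pad = k // 2
    padded = [0.0] * pad + list(xs) + [0.0] * pad
    return [
        sum(w * padded[i + j] for j, w in enumerate(kernel)) + bias
        for i in range(len(xs))
    ]


def residual_block(xs, kernel1, kernel2):
    """x + conv(relu(conv(relu(x)))): the skip connection adds the input back unchanged."""
    h = conv1d_same(relu(xs), kernel1)
    h = conv1d_same(relu(h), kernel2)
    return [x + y for x, y in zip(xs, h)]


# Toy sequence of 5 positions (in PassGAN each position would hold a feature vector).
x = [1.0, -2.0, 3.0, 0.5, -1.0]
# With all-zero kernels the block reduces to the identity: the input passes straight through.
print(residual_block(x, [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]))
```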

Architecture of PassGAN

Training to generate human-like passwords

PassGAN is a password generator that produces realistic passwords. By realistic, I mean passwords that you and I could actually come up with when creating an account on some website, be it a social network account or an online banking account.

Password cracking is a tedious task that involves testing a lot of different combinations. However, some combinations are more probable than others. For example, you might use a password that makes sense to you, like bluesky1991, with 1991 being your birth year, rather than something cryptic like 9y2[V^1>e_yfYE,r5q:e'w!cv. This is the assumption behind cryptanalysis techniques that try to crack passwords: they try the most likely passwords first.
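The "most likely first" idea can be sketched with a frequency count over previously leaked passwords. The tiny list below is made up for illustration, and ranking by raw frequency is a deliberately naive stand-in for the probabilistic models real tools use; `guesses_by_likelihood` and `guesses_needed` are hypothetical helpers.

```python
from collections import Counter


def guesses_by_likelihood(training_passwords):
    """Order candidate passwords from most to least frequent in a training list."""
    counts = Counter(training_passwords)
    return [pw for pw, _ in counts.most_common()]


def guesses_needed(target, ordered_guesses):
    """Number of guesses tried before hitting the target, or None if it is never tried."""
    for i, guess in enumerate(ordered_guesses, start=1):
        if guess == target:
            return i
    return None


leaked = ["123456", "password", "123456", "bluesky1991", "123456", "password"]  # toy data
ordered = guesses_by_likelihood(leaked)
print(ordered)  # ['123456', 'password', 'bluesky1991']
print(guesses_needed("bluesky1991", ordered))  # 3
print(guesses_needed("9y2[V^1>e_yfYE,r5q:e'w!cv", ordered))  # None
```

A human-chosen password is found as soon as it appears in such a ranking, while a random string is absent from any such list.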

There are already plenty of password recovery tools, such as HashCat (https://hashcat.net/hashcat/) and Aircrack-ng (https://www.aircrack-ng.org/), which implement advanced password cracking strategies such as mask attacks. But these strategies rely on strong assumptions, such as a fixed password length. In contrast, the PassGAN approach can, in principle, generate passwords of any length.
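To see why a mask attack assumes a fixed length, here is a small Python sketch of the idea. The placeholders ?l, ?u, ?d and ?s are modelled on hashcat's built-in character sets, but this is a simplified re-implementation for illustration (no literal characters or custom charsets), not hashcat itself.

```python
import itertools
import math
import string

# Simplified character classes, modelled on hashcat's built-in ?l ?u ?d ?s placeholders.
CHARSETS = {
    "l": string.ascii_lowercase,
    "u": string.ascii_uppercase,
    "d": string.digits,
    "s": " " + string.punctuation,
}


def parse_mask(mask):
    """Turn a mask like '?l?l?d?d' into one character set per position."""
    if len(mask) % 2 or any(mask[i] != "?" for i in range(0, len(mask), 2)):
        raise ValueError(f"unsupported mask: {mask!r}")
    try:
        return [CHARSETS[mask[i + 1]] for i in range(0, len(mask), 2)]
    except KeyError as err:
        raise ValueError(f"unknown placeholder ?{err.args[0]}") from None


def keyspace(mask):
    """Number of candidates the mask describes: the product of the charset sizes."""
    return math.prod(len(cs) for cs in parse_mask(mask))


def candidates(mask):
    """Enumerate every candidate; all of them have exactly len(mask) // 2 characters."""
    for chars in itertools.product(*parse_mask(mask)):
        yield "".join(chars)


def matches(password, mask):
    sets = parse_mask(mask)
    return len(password) == len(sets) and all(c in cs for c, cs in zip(password, sets))


mask = "?l?l?l?l?l?l?l?d?d?d?d"       # seven lowercase letters + four digits
print(matches("bluesky1991", mask))  # True
print(matches("bluesky91", mask))    # False: same pattern, different length
print(keyspace(mask))                # 80318101760000, i.e. 26**7 * 10**4
```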

How good is PassGAN at guessing your password?

The researchers used the RockYou dataset to train PassGAN, splitting it into a training set and a test set. They also evaluated it on a dataset of leaked LinkedIn passwords. They generated many passwords with PassGAN and counted how many matched real passwords from the test sets. 34.2% of the real LinkedIn passwords matched generated ones. Of course, PassGAN needs to generate billions of passwords to reach this score, but that is still relatively few: it substantially reduces the number of attempts compared to an attack that would test all possibilities.
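The evaluation boils down to streaming guesses and checking each one against the test set. A minimal sketch, assuming the metric is the fraction of distinct test passwords matched (the paper describes its exact counting rules); `match_rate` and the sample data are hypothetical.

```python
def match_rate(guesses, test_passwords):
    """Fraction of distinct test passwords that appear among the guesses.

    `guesses` can be any iterable, e.g. a generator streaming billions of
    candidates, so the guesses never need to fit in memory at once.
    """
    targets = set(test_passwords)
    matched = set()
    for guess in guesses:
        if guess in targets:
            matched.add(guess)
    return len(matched) / len(targets)


generated = ["123456", "qwerty", "dragon12", "123456"]  # hypothetical generator output
test_set = ["123456", "password", "qwerty", "letmein"]  # hypothetical held-out passwords
print(match_rate(generated, test_set))  # 0.5
```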

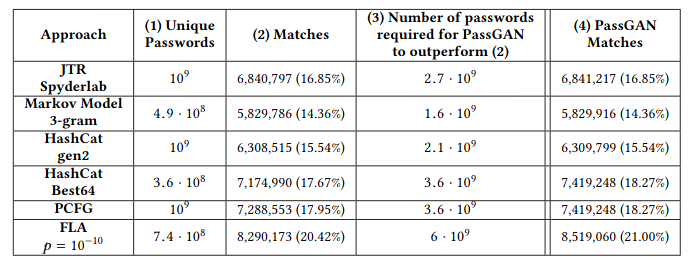

Comparison table of results for PassGAN and other techniques.

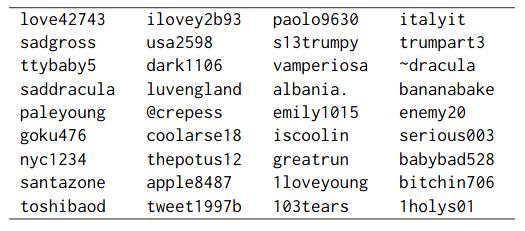

Thanks to the expressiveness of the Wasserstein GAN used by PassGAN (check out this nice blog post), even generated passwords that did not match any password in the dataset still look like plausible candidates.

Samples generated by PassGAN that did not match any password in the test set.

A drawback of PassGAN noted by the researchers is that it needs to generate more passwords than other methods to obtain a similar number of matches: up to twice as many, where other strategies need about a billion.

PassGAN can also be used in conjunction with other strategies. It is good at guessing passwords that other strategies could not guess, which produces a huge jump: up to 73% of matches overall.
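Measuring what a second tool contributes is a set computation: which test passwords does it crack that the first one missed? A hypothetical sketch, with made-up guess lists standing in for the outputs of two tools; `extra_matches` is an illustrative helper.

```python
def extra_matches(guesses_a, guesses_b, test_passwords):
    """Compare the test passwords cracked by two tools and by their combination."""
    targets = set(test_passwords)
    found_a = targets.intersection(guesses_a)
    found_b = targets.intersection(guesses_b)
    return {
        "tool_a": len(found_a),
        "tool_b": len(found_b),
        "only_b": len(found_b - found_a),  # passwords that only the second tool cracks
        "combined": len(found_a | found_b),
    }


rule_based = ["123456", "password", "password1"]     # hypothetical rule-based output
gan_based = ["password", "bluesky1991", "sunshine7"]  # hypothetical GAN output
test_set = ["123456", "password", "bluesky1991", "letmein"]
print(extra_matches(rule_based, gan_based, test_set))
# {'tool_a': 2, 'tool_b': 2, 'only_b': 1, 'combined': 3}
```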

PassGAN's main strength is its expressiveness: in principle, it can generate any password, regardless of its length. Such methods could therefore become extremely powerful when combined with prior knowledge about the passwords to crack. Incidentally, this is one of the directions for future work proposed by the researchers: using conditioning, a popular technique for improving generative models.
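One common way to condition a generator, used in conditional GANs, is to append an encoding of the condition to the noise vector it receives. The sketch below assumes a one-hot encoding and a hypothetical list of password policies as conditions; the noise size of 128 is an arbitrary choice here, and `conditional_input` is an illustrative helper.

```python
import random


def conditional_input(noise_dim, condition, conditions, rng=None):
    """Concatenate a random noise vector with a one-hot encoding of the condition."""
    if rng is None:
        rng = random.Random()
    if condition not in conditions:
        raise ValueError(f"unknown condition: {condition!r}")
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    one_hot = [1.0 if c == condition else 0.0 for c in conditions]
    return z + one_hot


# Hypothetical conditions, e.g. the password policy of the targeted website.
POLICIES = ["lowercase_only", "must_include_digit", "must_include_symbol"]
vec = conditional_input(128, "must_include_digit", POLICIES, rng=random.Random(0))
print(len(vec), vec[-3:])  # 131 [0.0, 1.0, 0.0]
```

During training, the generator would receive the condition of each real password it imitates, so that at generation time the condition steers its output.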

Your password is in danger

Now that anxiety is at its climax, I can sell you my revolutionary product for a good price! More seriously, as password cracking advances, it is fair to say that the way we choose passwords should advance as well.

It is easier to remember one intricate password than many, and having many simple or similar passwords makes you vulnerable to password cracking attacks. So the solution is to protect an encrypted database of passwords with a single master password. Encryption can make your password database virtually impossible to crack: with today's electronic computers, it would take at least several lifetimes. One such tool is pass (https://www.passwordstore.org/), a simple command-line tool that uses GPG encryption to store your passwords securely.
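A password manager also lets you use long, uniformly random passwords, which contain none of the human patterns PassGAN learns. A minimal sketch using Python's `secrets` module; the 94-character alphabet and the length of 20 are assumptions, not what pass itself uses. Each character drawn uniformly from an alphabet of size N adds log2(N) bits of entropy.

```python
import math
import secrets
import string

# Assumed alphabet: letters, digits and ASCII punctuation (94 characters).
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def random_password(length=20, alphabet=ALPHABET):
    """Pick each character independently with a cryptographically secure RNG."""
    return "".join(secrets.choice(alphabet) for _ in range(length))


def entropy_bits(length, alphabet_size):
    """Entropy of a uniformly random password: length * log2(alphabet size)."""
    return length * math.log2(alphabet_size)


print(random_password())                          # different on every run
print(round(entropy_bits(20, len(ALPHABET)), 1))  # 131.1
```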

Websites themselves also implement new ways to authenticate, such as two-factor authentication or fingerprint recognition.
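One widespread second factor is the time-based one-time password (TOTP, RFC 6238), the short code displayed by authenticator apps. The sketch below follows the RFC using only Python's standard library; it assumes HMAC-SHA1 and 30-second steps, the RFC's defaults, and is meant for illustration rather than production use.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret, counter, digits=6):
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: the last nibble picks 4 bytes
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret, timestamp=None, step=30, digits=6):
    """Time-based one-time password (RFC 6238): HOTP over the number of elapsed time steps."""
    if timestamp is None:
        timestamp = time.time()
    return hotp(secret, int(timestamp // step), digits)


secret = b"12345678901234567890"  # the published RFC test key; never use it for real
print(totp(secret, timestamp=59, digits=8))  # 94287082, the RFC 6238 test vector
print(totp(secret))                          # code for the current 30-second window
```

Because the code depends on a secret stored on your device and on the current time, a cracked password alone is no longer enough to log in.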

References

Link to PassGAN paper: https://arxiv.org/abs/1709.00440

Article on my website